In this post, I'll share how Kedro can help you create data platforms that support a wide variety of use cases and data consumer profiles. In my role as a data engineer, I have found that Kedro reduces the learning curve and lets data science teams extend and adapt existing projects as needed.

Introduction

A platform team defines patterns and practices that support consumers of their platform in developing and deploying their solutions. The goal is to reduce development time and provide substantial autonomy and ownership for production-grade deployment.

Platform teams are a well-known concept, described in the book Team Topologies by Matthew Skelton & Manuel Pais. The book quotes this definition from Evan Bottcher:

"A digital platform team is a foundation of self-service APIs, tools, services, knowledge and support which are arranged as a compelling internal product. Autonomous delivery teams can make use of the platform to deliver product features at a higher pace, with reduced coordination."

Common challenges

Offering secure data consumption patterns and ensuring a dependable execution environment enables teams to concentrate on generating and capturing value. For this reason alone, creating a platform, often built on top of another platform like Cloud or Kubernetes, is a crucial step for any company.

A common challenge when dealing with AI in general is: How can I deploy this data pipeline or machine learning model without causing disruption or enduring lengthy implementation processes (typically managed by a distant team that is unaware of our specific objectives)?

Deploying a model forms the foundation of MLOps and involves various layers of support, from data ingestion to model traceability. Simply put, we aim to transition our code from development (sandbox) to production seamlessly, minimizing challenges and the need for extensive refactoring.

When I started my career as a Data Engineer, a common scenario was that the Data Science team was working on iteration 5 of a model while production was still running the first iteration. To upgrade production, a developer had to translate a random forest algorithm from R to Java because of application restrictions in production. I didn't realize it at the time, but keeping the data science and Ops teams apart, with limited communication between them, is a classic MLOps anti-pattern.

Platform teams can provide standardized methods for monitoring and deploying ML solutions, using techniques such as containers, logging, and Data API contracts. Keeping documentation up to date with the latest changes and requirements makes these contracts simpler for users, which reduces onboarding time and helps them understand how the platform is meant to be used. When defining the platform, it's crucial to strike a balance between the inclination to develop from scratch and the option to adopt open-source or proprietary tools.

Kedro within an ML platform

There is plenty of material about Kedro online, including the tutorial in the official documentation, my own videos on YouTube, and the callout below.

What is Kedro?

Kedro is an open-source Python toolbox that applies software engineering principles to data science code. This makes it easier for a team to write maintainable code and reduces the time spent rewriting data science experiments so that they are fit for production.

Kedro was born at QuantumBlack to solve the challenges faced regularly in data science projects and promote teamwork through standardised team workflows. It is now hosted by the LF AI & Data Foundation as an incubating project.

I won't delve into what Kedro is or isn't. Instead, I shall focus on the practical aspects of the framework and how it can benefit ML Platform Teams, offering it as a potential tool to empower rapid development and value capture.

Abstracting data integration and structural patterns

Accessing data storage poses a challenge for many teams. Kedro offers a straightforward approach through the Data Catalog. Each project keeps a central registry of its data sources in YAML configuration, and that configuration can be checked in a CI/CD process to validate paths, confirm that data is up to date, monitor data drift, and more.
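As a rough illustration of the CI/CD idea, here is a minimal sketch of a path check that could run in a pipeline. It uses only the Python standard library and works on a plain dictionary standing in for a parsed catalog file. The policy (`ALLOWED_SCHEMES`), the function name `validate_catalog`, and the bucket names are all hypothetical. This is not part of Kedro itself.

```python
from urllib.parse import urlparse

# Hypothetical platform policy: storage schemes that projects may use.
ALLOWED_SCHEMES = {"s3", "abfs", "gs"}

# Stand-in for the dictionary obtained by parsing a project's catalog YAML.
# Entry names, dataset types, and paths are made up for this example.
catalog_config = {
    "customers": {
        "type": "pandas.CSVDataset",
        "filepath": "s3://platform-raw/customers.csv",
    },
    "scores": {
        "type": "pandas.ParquetDataset",
        "filepath": "data/07_model_output/scores.parquet",
    },
    "model": {"filepath": "s3://platform-models/model.pkl"},
}


def validate_catalog(config, allowed_schemes=ALLOWED_SCHEMES):
    """Return a list of human-readable problems found in the catalog entries."""
    problems = []
    for name, entry in sorted(config.items()):
        if "type" not in entry:
            problems.append(f"{name}: missing 'type'")
        filepath = entry.get("filepath")
        if filepath is None:
            continue  # some dataset types (e.g. SQL tables) have no filepath
        # A local path has an empty scheme, so it fails the policy check.
        if urlparse(filepath).scheme not in allowed_schemes:
            problems.append(f"{name}: '{filepath}' is not on approved storage")
    return problems


problems = validate_catalog(catalog_config)
for problem in problems:
    print(problem)
```

A CI job could fail the build whenever `problems` is non-empty, so that misconfigured entries are caught before deployment.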

To expedite development, users can also access a range of Kedro project tools and starters to initiate the development of their AI solutions in a standardized manner (with the flexibility to extend and adapt as necessary).

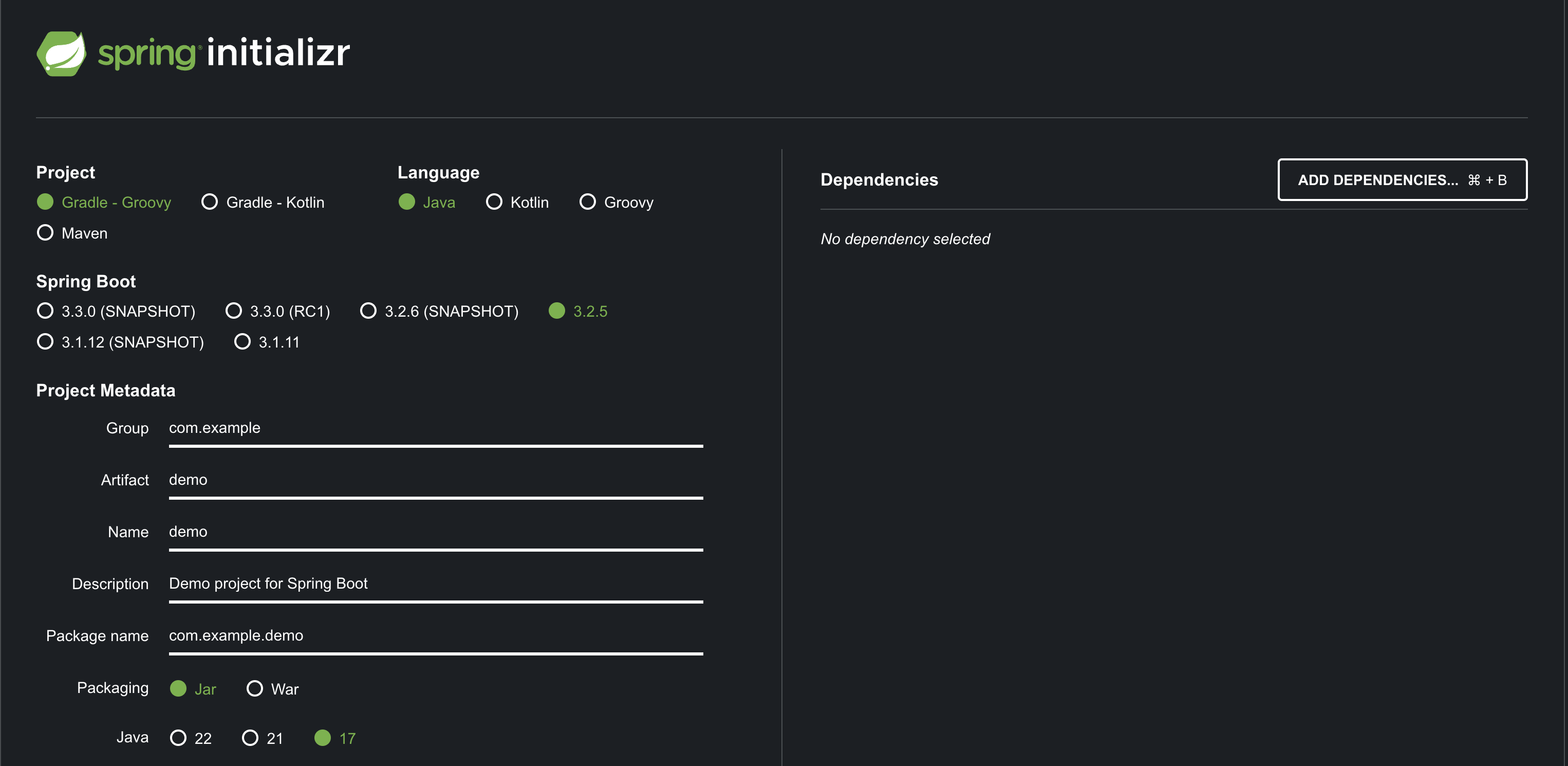

In more advanced scenarios, a Data Catalog can be created dynamically based on a user's data governance permissions and access controls. For instance, users could have access to something akin to Spring Initializr for launching new projects: starting from base configurations, selecting custom packages, and integrating with data governance platforms to show which data is available, among other features.
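A minimal sketch of the permission-based idea, assuming the governance platform can tell us which dataset names each user group may read. The group names, the `PLATFORM_CATALOG` dictionary, and `build_catalog_for` are hypothetical and only illustrate the filtering step.

```python
# Hypothetical governance lookup: dataset names each user group may read.
GROUP_PERMISSIONS = {
    "marketing-ds": {"customers", "campaigns"},
    "risk-ds": {"customers", "transactions"},
}

# Hypothetical platform-wide catalog of Kedro-style dataset definitions.
PLATFORM_CATALOG = {
    "customers": {"type": "pandas.CSVDataset", "filepath": "s3://raw/customers.csv"},
    "campaigns": {"type": "pandas.CSVDataset", "filepath": "s3://raw/campaigns.csv"},
    "transactions": {"type": "pandas.ParquetDataset", "filepath": "s3://raw/tx.parquet"},
}


def build_catalog_for(groups, platform_catalog=PLATFORM_CATALOG,
                      permissions=GROUP_PERMISSIONS):
    """Return only the catalog entries that the given groups may access."""
    allowed = set()
    for group in groups:
        # Unknown groups grant nothing rather than raising an error.
        allowed |= permissions.get(group, set())
    return {
        name: definition
        for name, definition in platform_catalog.items()
        if name in allowed
    }


user_catalog = build_catalog_for(["marketing-ds"])
print(sorted(user_catalog))
```

The resulting dictionary could then be written into a new project's catalog file by the project generator, or loaded directly, for example with Kedro's `DataCatalog.from_config`.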

Masking dependency complexity

Part of a team's autonomy lies in its capacity to choose its own tools and services. In ML, this usually means the packages needed to run a model, a newer version of pandas with better performance, or any other requirement. One way to encapsulate those dependencies, and reduce errors in other services, is to manage them with containers. Kedro integrates easily with Docker through the kedro-docker plugin. Again, this can be part of a CI/CD verification step that ensures users follow the platform's baseline conventions.
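One possible form of that CI/CD verification is sketched below: a check that compares a project's pinned requirements against the versions shipped in a shared base image. The baseline, the version numbers, and the function names are hypothetical, and the parser only handles simple `name==version` pins.

```python
# Hypothetical platform baseline: version series shipped in the base image.
PLATFORM_BASELINE = {"kedro": "0.19", "pandas": "2.2"}


def parse_pins(requirements_text):
    """Extract simple 'name==version' pins, ignoring comments and blank lines."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def find_conflicts(pins, baseline=PLATFORM_BASELINE):
    """List pins that fall outside the baseline series; extra packages are fine."""
    conflicts = []
    for name, series in sorted(baseline.items()):
        version = pins.get(name)
        if version is None:
            continue  # the project does not pin this package
        if version != series and not version.startswith(series + "."):
            conflicts.append(
                f"{name}=={version} conflicts with platform baseline {series}.x"
            )
    return conflicts


requirements = """
kedro==0.19.6
pandas==1.5.3  # older than the base image
scikit-learn==1.4.2
"""

conflicts = find_conflicts(parse_pins(requirements))
for conflict in conflicts:
    print(conflict)
```

Teams keep the freedom to add their own packages (here `scikit-learn`), while the check only flags pins that diverge from what the platform image provides.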

Keeping documentation up to date

When developing custom applications, one important but time-consuming task is to create documentation. GenAI is here to help us already.

Maintaining a semantic versioning contract, with compatibility between each of the deployed versions and up-to-date documentation, is a heavy task. According to the Code Time Report, developers can spend on average 40 minutes per day on tasks like documentation and reviewing code and pull requests.

Kedro provides updated and user-friendly documentation, thanks to the contributions of the community and the efforts of maintainers. ML teams can concentrate on documenting project specifics and decisions, rather than explaining how the custom framework functions. This approach reduces the learning curve since Kedro employs common approaches like OOP, Dependency Injection, Facade, Observer, and others, making it easier to grasp the foundational concepts of the framework. Additionally, Platform Teams have the flexibility to extend and implement customized versions of Kedro, incorporating their own Datasets, Hooks, or any other integrations they've created.

Wrapping up

While not necessarily a technical requirement, managing the communication flow between the teams that use a platform and the teams that build it is really important. Constant feedback about what's working well and what isn't leads to better products and solutions.

As you can see, Kedro is a complete framework that helps ML platform teams support the development of ML products at scale.

What are the frameworks you are using inside your platform and how are you organizing it?

We’re always looking for collaborators to write about their experiences using Kedro. Get in touch with us on our Slack workspace to tell us your story!

A version of this post was originally posted on Medium by Carlos Barreto