In recent research, we found that Databricks is the dominant machine-learning platform used by Kedro users.

The purpose of the research was to identify any barriers to using Kedro with Databricks; we are collaborating with the Databricks team to create a prioritized list of opportunities to facilitate integration. For example, Kedro is best used with an IDE, but IDE support on Databricks is still evolving, so we are keen to understand the pain points that Kedro users face when combining it with Databricks.

Our research took qualitative data from 16 interviews, and quantitative data from a poll (140 participants) and a survey (46 participants) across the McKinsey and open-source Kedro user bases. We analysed two user journeys.

How to ensure a Kedro pipeline is available in a Databricks workspace

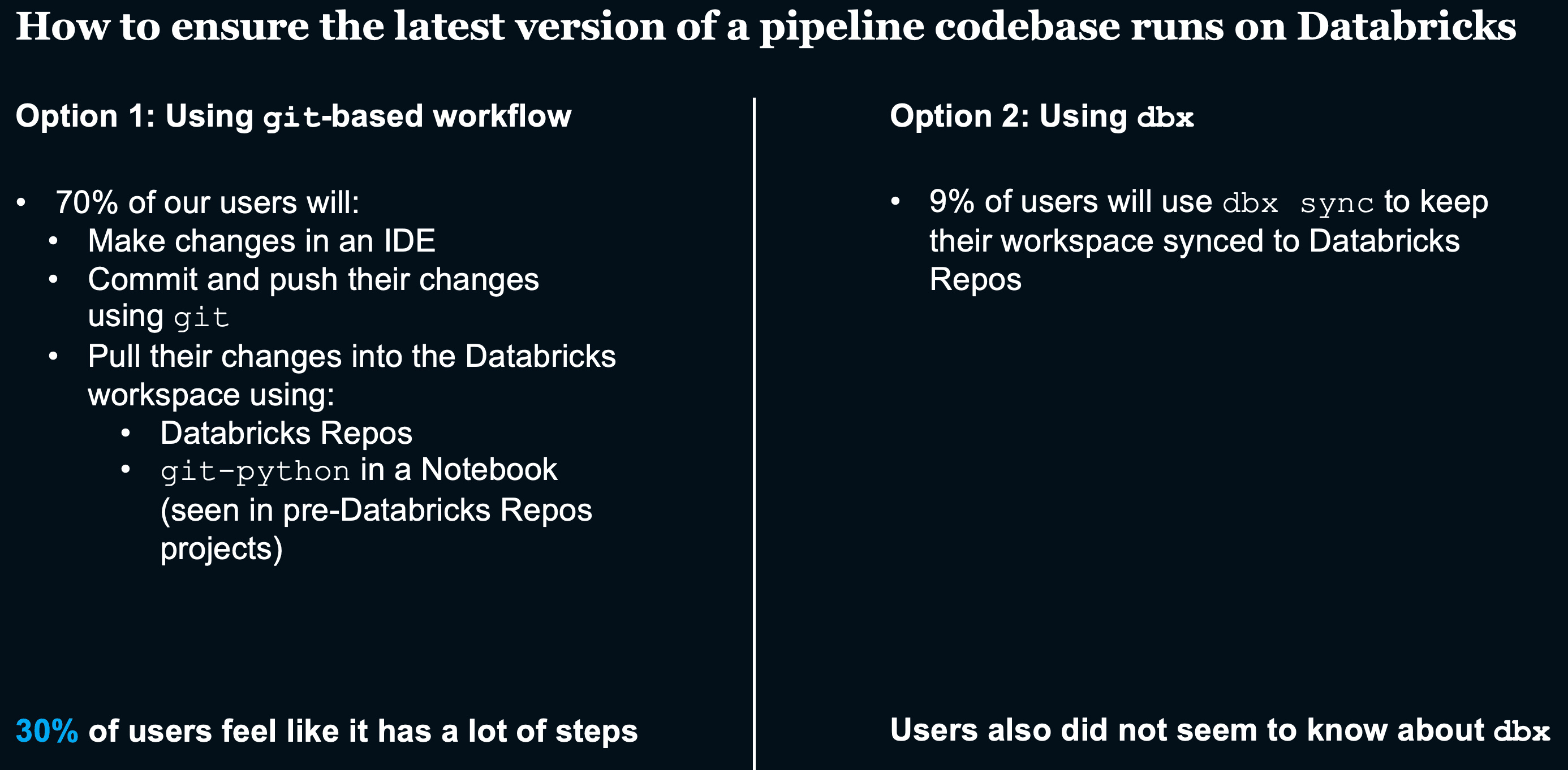

The first user journey we considered is how a user ensures the latest version of their pipeline codebase is available within the Databricks workspace. The most common workflow is to use Git, but almost a third of the users in our research set said there were a lot of steps to follow. The alternative workflow, which is to use dbx sync to Databricks repos, was used by less than 10% of the users we researched, indicating that awareness of this option is low.

How to ensure the latest version of a Kedro pipeline runs on Databricks

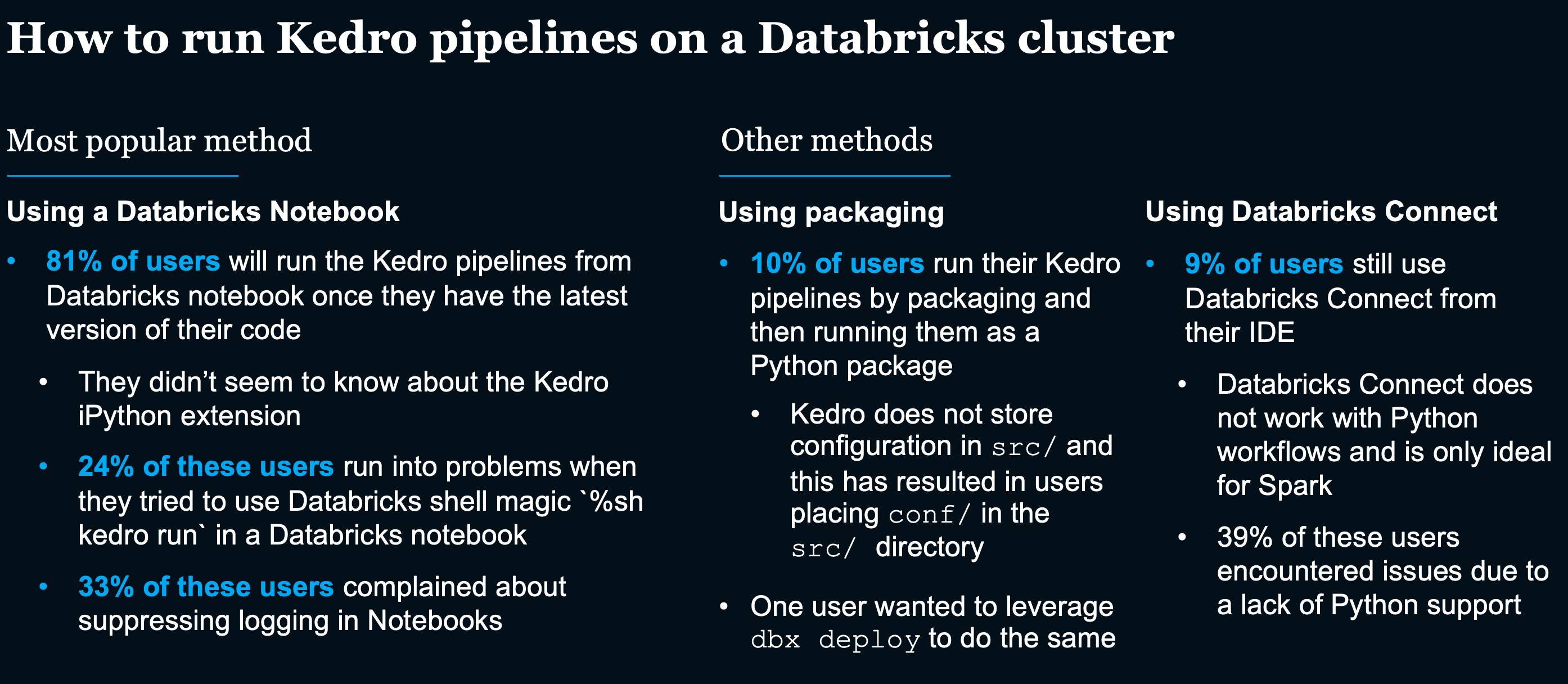

How to run Kedro pipelines using a Databricks cluster

The second user journey is how users run Kedro pipelines using a Databricks cluster. The most popular method, used by over 80% of participants in our research, is to use a Databricks notebook, which serves as an entry point to run Kedro pipelines. We discovered that many users were unaware of the IPython extension that significantly reduces the amount of code required to run Kedro pipelines in Databricks notebooks.

We also found that some users run their Kedro pipelines by packaging them and running the resulting Python package on Databricks. However, Kedro did not support the packaging of configurations until version 18.5, which has caused problems. The final option some users select is to use Databricks Connect, but this is not recommended since it is soon to be sunsetted by Databricks.

How to run a Kedro pipeline on a Databricks cluster.

The output of our research

To make it easier to pair Kedro and Databricks, we are updating Kedro’s documentation to cover the latest Databricks features and tools, particularly the development and deployment workflows for Kedro on Databricks with DBx. The goal is to help Kedro users take advantage of the benefits of working locally in an IDE and still deploy to Databricks with ease.

You can expect this new documentation to be released in the next one to two weeks.

We will also be creating a Kedro Databricks plugin or starter project template to automate the recommended steps in the documentation.

Coming soon…

We have a managed Delta table dataset available in our Kedro datasets repo, which will be available for public consumption soon. We are also planning to support managed MLflow on Databricks.

We have set up a milestone on GitHub so you can check in on our progress and contribute if you want to. To suggest features to us, report bugs, or just see what we’re working on right now, visit the Kedro projects on GitHub. We welcome every contribution, large or small.

Find out more about Kedro

There are many ways to learn more about Kedro:

Join our Slack organisation to reach out to us directly if you’ve a question or want to stay up to date with news. There's an archive of past conversations on Slack too.

Read our documentation or take a look at the Kedro source code on GitHub.

Check out our video course on YouTube.